Post-processing pipeline for kid's soccer photos

Imagine that you break out the DSLR to snap some action shots of your child in one of their first real soccer games. Among all the frames you failed to manually focus at 300mm (despite your background as a 1st Camera Assistant), there are some good shots. However, when you get home you realize that all the images are in RAW (.CR2) format, and converting them all to another format is going to be a chore.

(To Ubuntu Desktop's credit, it does preview CR2 files nicely, but I need batch capability.)

In 2024, I expect this is a problem others have run into often enough that existing libraries already cover it. ChatGPT suggested a combination of dcraw and ImageMagick that takes the CR2 inputs through an intermediary PPM* file format and on to JPEG outputs. I love bash scripts, but was happy to have help with the syntax of all the file handling:

```bash
#!/bin/bash

# input and output directories
input_dir="/input_images"
output_dir="/output_images"

# ensure output directory exists
mkdir -p "$output_dir"

# if nothing matches, skip the loop instead of processing the literal "*.CR2" pattern
shopt -s nullglob

# Process input CR2 files
for file in "$input_dir"/*.CR2; do
  # Extract filename without extension
  filename=$(basename -- "$file")
  filename_noext="${filename%.*}"

  # Convert CR2 to PPM using dcraw (-c: write to stdout, -w: use the camera's white balance)
  dcraw -c -w "$file" > "$output_dir/$filename_noext.ppm"

  # Convert to JPEG using ImageMagick. dcraw already applies the camera's rotation flag
  # and a PPM carries no EXIF orientation, so -auto-orient is only a safeguard here.
  convert -auto-orient "$output_dir/$filename_noext.ppm" "$output_dir/$filename_noext.jpg"

  # remove tmp PPM file
  rm "$output_dir/$filename_noext.ppm"
done

echo "Image conversions completed."
```

But wait, I don't want to install these libraries on my pristine laptop. Let's get Docker involved. In the following Dockerfile, we'll take Ubuntu as a base and install our dependencies.

```dockerfile
FROM ubuntu

# Install required dependencies
RUN apt-get update && apt-get install -y \
    dcraw \
    imagemagick \
    && rm -rf /var/lib/apt/lists/*

# Set the working directory
WORKDIR /app

# I'm actually not going to copy the script into the container - see below
#COPY convert_raw.sh /app/convert_raw.sh
#RUN chmod +x /app/convert_raw.sh

# Specify the entry point
ENTRYPOINT ["/app/convert_raw.sh"]
```

Even though this is a single-container application, I still like to make a docker-compose.yml as a way of documenting all the runtime options. In this case, that's really just the input/output directories and the script itself. I like to mount my working script into the container at runtime so I can modify it without constantly rebuilding the image. Because it's mounted rather than copied, the script has to be executable on the host (`chmod +x convert_raw.sh`). This pattern works well in development or in ad-hoc situations like this one:

```yaml
version: '3'

services:
  image-converter:
    build:
      context: .
      dockerfile: Dockerfile
    container_name: image-converter-container
    volumes:
      - ./inputs:/input_images
      - ./outputs:/output_images
      - ./convert_raw.sh:/app/convert_raw.sh # Mount fresh script at runtime!
    command: sh -c "/app/convert_raw.sh"
```

Double-check the paths to the inputs and outputs. Strictly speaking, `command` overrides the image's CMD, not its ENTRYPOINT: with an exec-form ENTRYPOINT like the one in the Dockerfile, Compose passes the `command` values to the script as arguments, which this script simply ignores. So the line is redundant in this case. When I need to troubleshoot something in the context of the container, I'll temporarily replace that line with `entrypoint: ["tail", "-f", "/dev/null"]` to keep the container running, then open a shell inside it with `docker exec -it <container_name> /bin/bash`. My point being that overriding the startup command in docker-compose.yml is another easy way to experiment without having to rebuild the image.

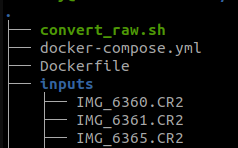

Here is the structure of the docker stuff in relation to the script and source files:

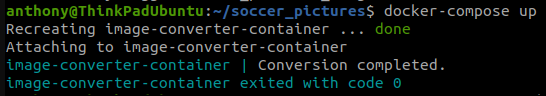
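A wrong host path tends to surface only as conversion errors and an empty `outputs` folder, so it can help to check the layout before starting the container. Below is a minimal sketch of such a check; the function name `preflight` and its optional project-root argument are hypothetical, and it assumes the `./inputs` and `./convert_raw.sh` paths from the compose file.

```bash
#!/bin/bash
# Hypothetical preflight check for the host-side layout mounted by
# docker-compose.yml. Takes an optional project root (default: current dir),
# prints each problem it finds and returns non-zero if there were any.
preflight() {
  local root=${1:-.} problems=0 saved_opts
  local -a raws=()

  if [[ ! -d "$root/inputs" ]]; then
    echo "missing directory: $root/inputs"
    problems=$((problems + 1))
  else
    # Match .CR2 and .cr2, and get an empty array when nothing matches.
    # shopt -p exits non-zero when an option is off; "|| true" keeps that harmless.
    saved_opts=$(shopt -p nullglob nocaseglob || true)
    shopt -s nullglob nocaseglob
    raws=("$root"/inputs/*.cr2)
    eval "$saved_opts"  # restore the caller's glob options
    if (( ${#raws[@]} == 0 )); then
      echo "no .CR2 files in $root/inputs"
      problems=$((problems + 1))
    fi
  fi

  # The image's ENTRYPOINT executes the bind-mounted script directly,
  # so it needs the execute bit on the host.
  if [[ ! -x "$root/convert_raw.sh" ]]; then
    echo "not executable (try chmod +x): $root/convert_raw.sh"
    problems=$((problems + 1))
  fi

  (( problems == 0 ))
}
```

For example, after sourcing it from the project directory: `preflight && docker-compose up`.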

Cross your fingers and run: `docker-compose up`

And just like that: JPEGs totaling 326MB, down from 861MB for the original CR2s.
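To make the same before/after comparison on your own shoot, a small sketch like the one below sums file sizes and computes the percentage saved. The helper names `total_bytes` and `percent_saved` are hypothetical, and `total_bytes` relies on the `-printf` option of GNU `find`, which Ubuntu ships. For the totals above, `percent_saved 861 326` prints `62.1`, i.e. the JPEGs take up roughly 62% less space than the RAW files.

```bash
#!/bin/bash
# Sum the sizes, in bytes, of regular files under directory $1 whose names
# match the case-insensitive glob $2. Prints 0 when nothing matches.
total_bytes() {
  find "$1" -type f -iname "$2" -printf '%s\n' | awk '{ s += $1 } END { print s + 0 }'
}

# Percentage reduction going from $1 to $2 (any consistent unit), one decimal place.
percent_saved() {
  awk -v before="$1" -v after="$2" 'BEGIN { printf "%.1f\n", (1 - after / before) * 100 }'
}
```

Run from the project directory: `percent_saved "$(total_bytes inputs '*.cr2')" "$(total_bytes outputs '*.jpg')"`.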

Of course there are a lot more possibilities with these two libraries, but this is the minimum-viable "RAW to JPEG" pipeline.
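As one example of where to go next, here is a sketch of a slightly sturdier loop (not the script I actually ran). It pipes dcraw's PPM output straight into ImageMagick, where `-` tells `convert` to read from stdin, so no temporary PPM is written; it matches `.CR2` and `.cr2` alike; and it skips images that already have a JPEG, so re-running after adding new photos only converts the new ones. The function name `convert_all` is hypothetical.

```bash
#!/bin/bash
# Sketch of a sturdier convert_raw.sh; convert_all is a hypothetical name.
# pipefail: a dcraw failure makes the whole "dcraw | convert" pipeline fail.
set -o pipefail
# nullglob: an empty input folder gives zero iterations, not a literal "*.cr2".
# nocaseglob: "*.cr2" also matches ".CR2" files.
shopt -s nullglob nocaseglob

convert_all() {
  local input_dir=$1 output_dir=$2
  local file name out
  mkdir -p "$output_dir"
  for file in "$input_dir"/*.cr2; do
    name=$(basename -- "$file")
    out="$output_dir/${name%.*}.jpg"
    # Skip images that already have a JPEG from an earlier run.
    if [[ -e "$out" ]]; then
      echo "skip: $out"
      continue
    fi
    # Stream the PPM from dcraw into ImageMagick; "-" means read from stdin.
    if dcraw -c -w "$file" | convert - -auto-orient "$out"; then
      echo "ok: $out"
    else
      echo "failed: $file" >&2
      rm -f "$out"  # drop any partial output
    fi
  done
}
```

To use it as the mounted script, end convert_raw.sh with `convert_all /input_images /output_images`.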

Stay tuned for turning these into animated GIF frames. ImageMagick can scale and crop the frames before they are animated with JSgif (that step will happen in a Node script).