Virtual machines are awesome; there is no debate about that. They have also become a lot easier to use, a fact that their growing popularity reinforces.

In this post I'll argue that the tools of virtualization have become so accessible to developers that they provide entirely new, more organized ways to develop complex applications. So let's talk about Docker.

Docker is Containerization

It's true that virtualization and containerization are different animals. I'm not going to get into the differences because they have been explained in detail elsewhere.

To follow the rest of this post, here is all you need to understand:

- Docker is software that lets you define the configuration of containers and automate their setup. Containers are like lightweight virtual machines hosted within your computer.

- A container is an instance of an image. An image is the base configuration for a particular environment or app, and is typically built from a Dockerfile.

- Multiple containers can run simultaneously ( often providing the various services of an application ), sharing the resources of the host system and communicating over a virtual network.

The primary benefit, as the terminology implies, is isolation between the host system and whatever is going on inside the container(s). The term 'sandboxing' playfully describes this containment of the fun.

Containerization is Sanity

Something as 'simple' as keeping multiple versions of MySQL, Python, or Node on the same system can quickly lead to a bad time.

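Conflicting dependencies are where the nightmare really begins: when two projects need different versions of the same library, a single system-wide install can't satisfy both.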

Version Control for Everything

A Dockerfile is like a blueprint, and it can get quite complex. Better to have that complexity written down in one file, which can be committed to version control alongside your code, than to have it exist only as the undocumented state of your machine.
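Here is a small illustration: a sketch of a Dockerfile for the static-site scenario in the practical examples later in this post. It assumes your site's files live in a site/ directory next to the Dockerfile. The pinned tag nginx:1.25 is just an example. Because the exact base version is written into the file, committing the file versions the environment along with the code.

```dockerfile
# Pin a specific tag of the official nginx image (1.25 is only an example)
FROM nginx:1.25

# Copy the site's files into the directory nginx serves by default
COPY site/ /usr/share/nginx/html/
```

Build and run it with the commands below ( my-site is a hypothetical tag ):

$> docker build -t my-site .

$> docker run --name my-site -d -p 8080:80 my-site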

Deliverables

A stupid word that gets thrown around a lot, 'deliverable' sometimes describes the final product handed off from developers to those responsible for deployment. Especially when these are different people, there are common pitfalls:

- missing/mismatched dependencies

- incorrect base OS

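- failure to load 'prod' configuration

Handing off an image instead of a set of install instructions addresses the first two directly, because the base OS and dependencies travel with the app.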

Examples from my Adventures

Solr Server

Apache Solr has a long relationship with Drupal, so I need access to a Solr server from time to time. I have pages and pages of notes about installing Solr, and it's time-consuming every time.

This is a great example of when an off-the-shelf image from Docker Hub is in order. I'm not actually interested in the nuances of the Lucene version and shit; I just need a working Solr server so I can continue developing my application. Save yourself some time and check out which pre-built images might satisfy your situation.
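Even with an off-the-shelf image, a tiny Dockerfile is a handy place to pin the version and create a core on startup. The sketch below assumes the official solr image and its solr-precreate command. The tag solr:9 and the core name "drupal" are hypothetical choices. The core uses Solr's default configset. A real Drupal integration usually wants its own Solr configuration, which this sketch does not install.

```dockerfile
# Official Solr image, pinned to a major version (9 is an example)
FROM solr:9

# solr-precreate creates the named core on first start, then runs Solr
# in the foreground. "drupal" is a hypothetical core name.
CMD ["solr-precreate", "drupal"]
```

Build and run it with the commands below, then browse to http://localhost:8983 for the admin UI:

$> docker build -t drupal-solr .

$> docker run --name solr -d -p 8983:8983 drupal-solr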

OpenCV Environment

If you want to play around with image processing ( I know I do! ), you'll quickly find yourself in need of an environment with a current version of OpenCV installed.

The internet is full of 'build scripts' for various versions, each targeting a slightly different OpenCV version and geared toward either a Python or a C++ workflow. Installation is moderately complex and involves a compile step that can take a long time to complete. By translating one of these scripts into a Dockerfile, you can ensure that your preferred environment can be consistently re-created.
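Below is a minimal sketch of such a Dockerfile. It uses a different technique from the build scripts described above. Instead of compiling OpenCV from source, it installs the prebuilt opencv-python-headless wheel from PyPI. That is much faster, but it gives you less control over build options. The base image tag, the extra GLib package, and the /work directory are all assumptions.

```dockerfile
# Debian-based Python image; pinning the Debian release keeps apt package names stable
FROM python:3.11-slim-bookworm

# GLib runtime, installed defensively (assumption: some OpenCV wheel versions have needed it)
RUN apt-get update \
 && apt-get install -y --no-install-recommends libglib2.0-0 \
 && rm -rf /var/lib/apt/lists/*

# Prebuilt OpenCV without GUI dependencies; pip pulls in numpy as a dependency
RUN pip install --no-cache-dir opencv-python-headless

# Scripts and images get mounted here at run time
WORKDIR /work

# Default command: print the installed OpenCV version as a quick smoke test
CMD ["python", "-c", "import cv2; print(cv2.__version__)"]
```

To run a script of your own against it, use the commands below. detect.py is a hypothetical script name, and --rm deletes the container when it exits:

$> docker build -t opencv-env .

$> docker run --rm -v /home/anthony/opencv:/work opencv-env python /work/detect.py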

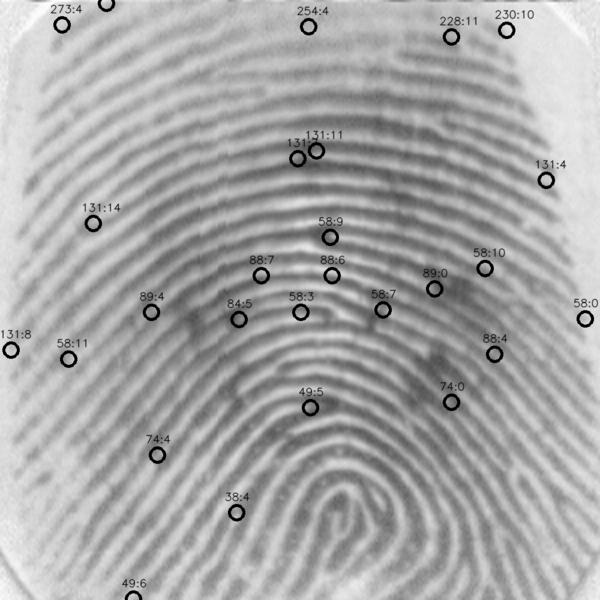

My attempt at identifying bifurcations

Stay tuned for a future post on my foray into OpenCV.

CoreNLP Server

At one point I was really interested in chatbots and how language processing is used in AI. This quest often leads one to CoreNLP ( NLP stands for Natural Language Processing ), a toolkit developed and open-sourced by Stanford.

This is another 'non-trivial' install, including an f-load of language model files that contain the language-specific secret sauce. By putting the installation steps into a Dockerfile, you'll isolate the dependencies and avoid having to clean up later. It should also make upgrading to a new version easier.
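Here's a sketch of what that Dockerfile might look like. It makes two assumptions: the eclipse-temurin:17-jre image provides Java, and the stanford-corenlp-latest.zip link from the CoreNLP download page is still current, so check it before relying on it. The main zip includes the default English models. Other languages ship as separate model jars, and this sketch does not fetch them.

```dockerfile
# Java runtime base image (Ubuntu-based, so apt-get is available)
FROM eclipse-temurin:17-jre

# Tools needed to fetch and unpack the distribution over HTTPS
RUN apt-get update \
 && apt-get install -y --no-install-recommends ca-certificates wget unzip \
 && rm -rf /var/lib/apt/lists/*

# Download CoreNLP (code + default English models) and unpack it to /opt/corenlp.
# Assumes the zip unpacks to a single stanford-corenlp-<version> directory.
WORKDIR /opt
RUN wget -q https://nlp.stanford.edu/software/stanford-corenlp-latest.zip \
 && unzip -q stanford-corenlp-latest.zip \
 && rm stanford-corenlp-latest.zip \
 && mv stanford-corenlp-* corenlp

WORKDIR /opt/corenlp
EXPOSE 9000

# Start the HTTP server; the JVM expands "-cp *" to every jar in this directory
CMD ["java", "-mx4g", "-cp", "*", "edu.stanford.nlp.pipeline.StanfordCoreNLPServer", "-port", "9000", "-timeout", "15000"]
```

Build and run it with the commands below. The server should then answer at http://localhost:9000:

$> docker build -t corenlp .

$> docker run --name corenlp -d -p 9000:9000 corenlp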

MQTT Broker

Playing around with IoT? I recently learned about MQTT, a bitchingly fast, lightweight publish/subscribe messaging protocol designed for devices with limited resources. A broker is the central hub of a star network topology. It receives the devices' messages and relays each one to whichever clients have subscribed to that message's topic.

Under ideal circumstances, a broker's throughput can reach many thousands of messages per second, even with a large number of clients.

For development purposes ( and small applications ), this is a great example of a service that can live inside a container and be accessible only via its specific port.
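Here is a sketch using the eclipse-mosquitto image from Docker Hub. Mosquitto is just one broker among several, and choosing it here is an assumption. Mosquitto 2.x only accepts connections from localhost unless a listener is configured, so the sketch writes a minimal config. That config allows anonymous clients, which is acceptable for local development only.

```dockerfile
# Official Eclipse Mosquitto broker image, pinned to the 2.x series
FROM eclipse-mosquitto:2

# Replace the default config: listen on 1883 on all interfaces and
# allow clients without credentials (development only!)
RUN printf 'listener 1883\nallow_anonymous true\n' > /mosquitto/config/mosquitto.conf

# Document the MQTT port; it still has to be published with -p at run time
EXPOSE 1883
```

Build and run it with the commands below. Only port 1883 is reachable from the host:

$> docker build -t mqtt-dev .

$> docker run --name mqtt-dev -d -p 1883:1883 mqtt-dev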

Note: The alternative here is to use a cloud-hosted broker, like HiveMQ. I think there is a free tier, and the logging/search/analytics interfaces are very useful.

Blender-Python Development

Back in college I thought I was pretty crafty with 3D modeling; at the time, Maya and 3D Studio Max were the popular tools. Later I learned about Blender, something I didn't have to pirate because it's open source.

Recently I wanted to learn about scripted 3D modeling and remembered that Blender embeds a Python interpreter, so it has a very literal Python API ( the bpy module ). With the right development environment, the list of things a script can do is nearly endless: modeling, texturing, lighting, camera moves.

So again, this is the kind of environment that's nice to define in a Dockerfile and run inside a container. It'll keep your host system clean, and it's really easy to move to another computer ( like one Dockerfile easy ).
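Here's a sketch of that kind of Dockerfile. It assumes Ubuntu's own blender package, which may lag behind the latest Blender release. Blender's --background and --python options run a script without opening the UI. The container is meant to be used like a command: you pass the path of your script as its argument.

```dockerfile
# Ubuntu base; the distro's blender package is an assumption (often older than upstream)
FROM ubuntu:22.04

# noninteractive stops apt from prompting (e.g. for tzdata) during the build
RUN apt-get update \
 && DEBIAN_FRONTEND=noninteractive apt-get install -y blender \
 && rm -rf /var/lib/apt/lists/*

# Scripts and output files are exchanged with the host through this directory
WORKDIR /work

# Run Blender with no UI; the script path given to "docker run" is appended here
ENTRYPOINT ["blender", "--background", "--python"]
```

Usage is shown below, where make_scene.py is a hypothetical bpy script in the mounted directory:

$> docker build -t blender-py .

$> docker run --rm -v /home/anthony/blender:/work blender-py /work/make_scene.py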

The List Goes On: Drupal 8, ZoneMinder

You get it. Just use your imagination.

Practical Examples

Ok, time to show some practical examples. I'm going to use the official nginx image, but these concepts should translate well to other use cases.

Let's pretend that you have a simple static website to host. You heard that nginx is sweet, but you don't want to install it on your workstation. ( Maybe you already have it installed but want to try something experimental. )

First, pull the official image from Docker Hub ( I'm assuming you have Docker installed ):

$> docker pull nginx

Keep in mind, this downloads an image, not a Dockerfile. That's why the download is so much larger than a text file would be: an image contains the layered filesystem ( base OS, nginx binaries, default config ) that a Dockerfile describes. Once it finishes downloading, you can confirm it has been added to your list of locally available images with:

$> docker images

REPOSITORY   TAG      IMAGE ID       CREATED      SIZE
nginx        latest   7f70b30f2cc6   8 days ago   109 MB

Next, we'll run this image to create a container. The --name option is passed to assign a semantic name "nginx-fun". The -d flag indicates detached ( runs in the background ), and the last bit is the name of the image that we pulled.

$> docker run --name nginx-fun -d nginx

11ebe4096d2c3942bf98dd....

The output is a unique ID, which can also be used to identify the container. Confirm it's running with:

$> docker ps -a

Among the output you'll see that the container's status is "Up", along with how long it has been running.

So far this container is kinda boring. It doesn't contain the app code we intended to run, and is inaccessible as a web server from the host.

To clean up (remove) the container, it first needs to be stopped:

$> docker stop nginx-fun

Checking the output of docker ps -a will now show the status as 'Exited'. ( The -a flag shows all containers, including those that are not running. )

Now the container can be deleted using the rm command:

$> docker rm nginx-fun

Volumes

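The simplest way to share files between your host environment and what's happening in the container is to mount a volume, which feels kind of like symlinking directories between the host and container. ( Strictly speaking, mounting a host directory like this is called a bind mount. ) The -v flag defines the relationship between the directories as /path/on/host:/path/in/container, and the optional :ro suffix makes the mount read-only inside the container.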

$> docker run --name nginx-fun -v /home/anthony/site:/usr/share/nginx/html:ro -d nginx

To confirm that our code got mounted into the container, we can take a peek inside by running /bin/bash interactively:

$> docker exec -it nginx-fun /bin/bash

<-- we drop into the container -->

root@21e27d94ade9:/# cat /usr/share/nginx/html/index.html

<-- this should be your index.html -->

Type exit to leave the container's shell. The container itself keeps running, because docker exec only started an extra process inside it.

While this web server is technically up and running, it still can't be accessed because the container's port 80 isn't being forwarded to the host. Let's take care of that next. Don't forget to clean up after yourself:

$> docker stop nginx-fun && docker rm nginx-fun

NOTE: Yes, of course there are fully-featured apps like Portainer that provide a UI to all of these same features.

Port Forwarding

To forward a port from the container to the host, use the -p flag, which takes a host:container pair of ports:

$> docker run --name nginx-fun -v /home/anthony/site:/usr/share/nginx/html:ro -d -p 8080:80 nginx

Finally, you should be able to see the running site at http://localhost:8080 on the host.

Note: When you're done, remember to clean up: stop and remove the container as before, then remove the nginx image using docker rmi nginx.

Conclusion

These basic concepts are enough to get started.

In the future I plan to address some more advanced use cases:

- building your own Dockerfiles

- networking multiple services together using docker-compose

- permissions ( remember how the container user was root? )

- managing containers on remote machines using docker-machine

Don't hesitate to integrate containerization into an upcoming project. I hope it will bring more sanity into your workflow.